In this post, we will discuss how to access the Realsense camera sensors on the NVIDIA Jetson Nano using ROS 2 Foxy. However, before we delve into that, let’s take a moment to recap what we have accomplished thus far.

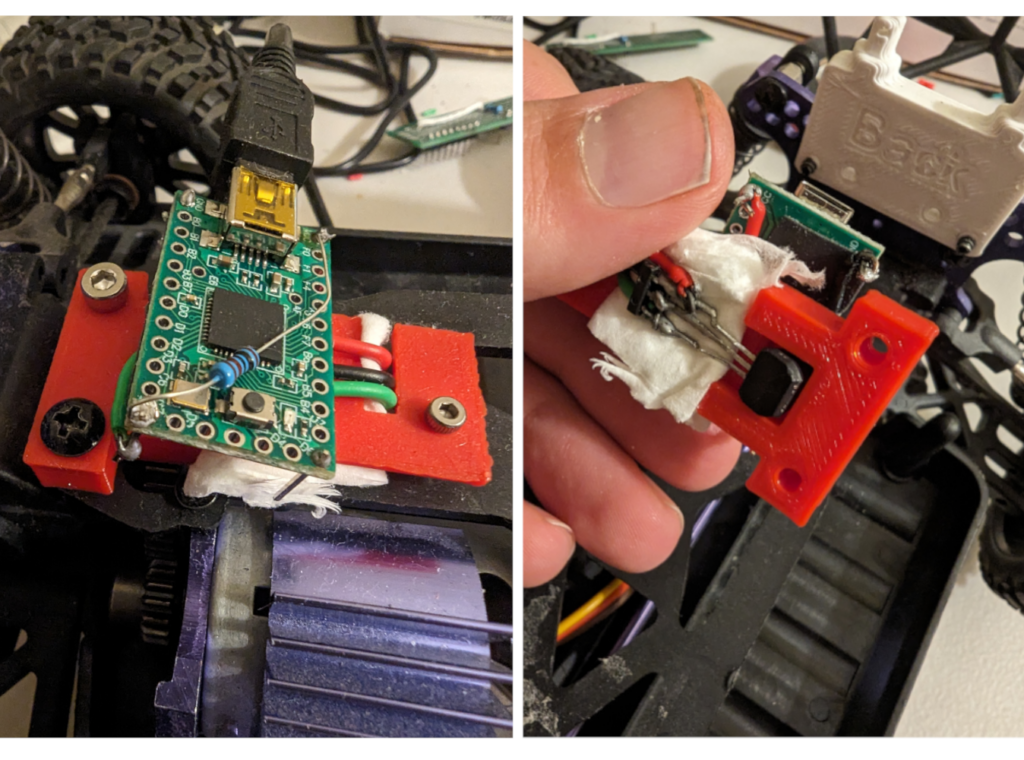

- Introduction to my Jetson based Robot – DonkeyJet

- ROS 2 on Jetson Nano using Docker, we presented the base ROS 2 docker file for this project and briefly touched upon cross-compilation and the docker’s multiplatform support. Up to this point, I have made substantial modifications to the docker image. Fortunately, the docker file mentioned in the post automatically updates from GitHub. The most notable changes include the addition of

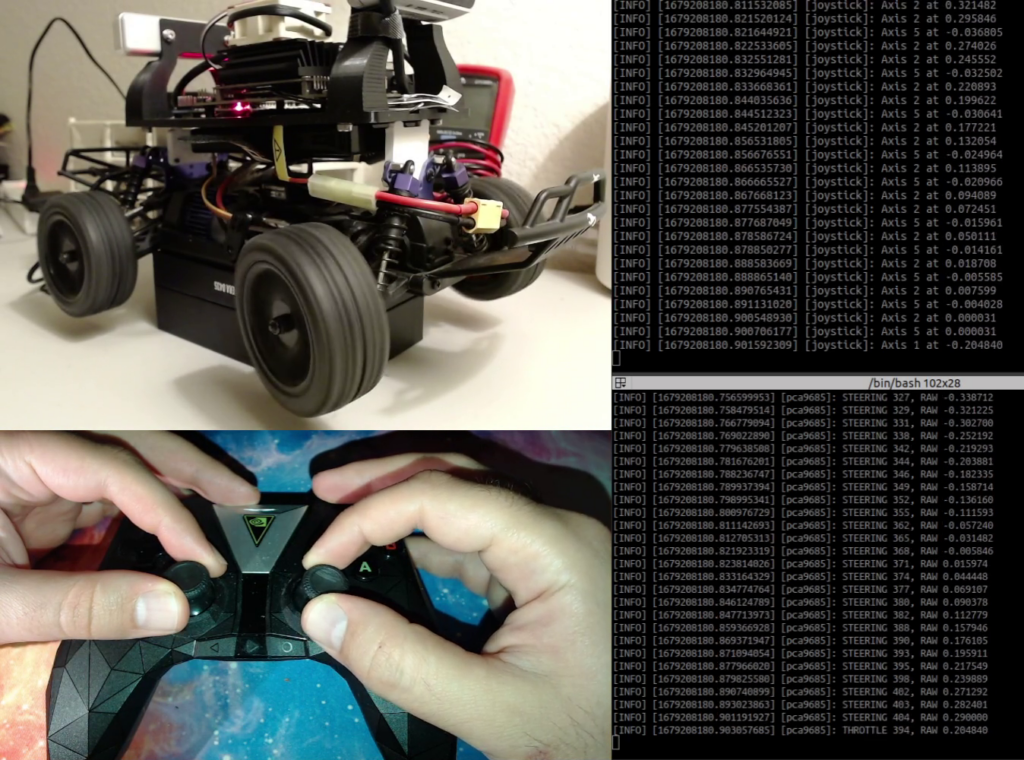

navigation 2,rtabmap_ros, and all the necessary dependencies. Unfortunately, the build time for the images has significantly increased, particularly for the arm64 platform. - Using Bluetooth Controller with ROS 2 on Jetson Nano to learn how to read the Bluetooth controller in ROS 2 and Linux.

- Actuation and PCA9685 with ROS 2 on Jetson Nano We explored how to utilize I2C on embedded Linux. Furthermore, we established a connection between the Joystick node and the actuation node to enable manual control of the robot using a game controller. I made some minor code modifications on GitHub that were not discussed in the blog post.

Hardware Issue

I initially used a combination of D435i and T265 RealSense sensors. However, I encountered a problem where I couldn’t properly access the IMU data from the D435i camera on the Jetson Nano, even though I could retrieve the IMU data on the X86 machine. I conducted extensive testing and reported the issue on the Realsense project on GitHub, but unfortunately, I couldn’t find a solution. After spending a considerable amount of time on this, I decided to switch to the D435 instead, which doesn’t have an IMU. Since the T265 sensor has the same IMU capabilities, I won’t be missing out on the functionality provided by the D435i sensor.

Docker Image for Realsense on NVIDIA Jetson Nano

Let’s start with the Dockerfile. You can find the Dockerfile on the GitHub page of the project

The base image for this image is ros2_base:latest that we built in previous blog posts. And we are also tagging it as ros2_base:latest.

The container contains both the Realsense SDK and the realsense ROS 2 wrapper. However, it is unfortunate that the support for T265 camera in the ROS 2 wrapper has been deprecated. As a result, it is likely that we will not utilize the ROS 2 wrapper and instead develop our own code for T265 camera integration. Alternatively, we can choose to revert to a previous commit before the deprecation commit and use that version, which still supports the T265 camera.

Here is the build command for both ARM and X86 platforms.

docker buildx build \

--platform linux/amd64,linux/arm64 \

-f realsense.Dockerfile \

--build-arg BASE_IMAGE=${REGISTRY}/ros2_base:latest \

-t ${REGISTRY}/ros2_realsense:latest \

--push . Please ensure that the REGISTRY environment variable is defined. If you prefer to use dockerhub as your docker registry, you should define it by running export REGISTRY=<your username on dockerhub> in terminal or adding it to the ~/.bashrc file.

Use the following commands to run the container.

docker pull ${REGISTRY}/ros2_realsense:latest

docker run \

--name ros2_realsense \

--rm \

-it \

--runtime nvidia \

--network host \

--gpus all \

--privileged \

-e DISPLAY \

-v /dev/bus/usb/:/dev/bus/usb/ \

${REGISTRY}/ros2_realsense:latest \

bash If you don’t pass -v /dev/bus/usb/:/dev/bus/usb/, then each time any of Realsense cameras is re-plugged, the container needs to be restarted.

After running the container you should be able to run the Realsense examples. They are all starting with rs- command. The one that I want very useful are the followings:

- The

rs-enumerate-devicescommand provides a comprehensive list of all connected Realsense cameras. It not only displays the supported streams from each camera but also provides information about the supported formats of those streams, firmware versions, and other relevant details. This command is useful for obtaining a detailed overview of the connected Realsense cameras and their capabilities. - The

rs-depthcommand generates an ASCII image from the D435 camera. This feature is particularly beneficial when testing the functionality of the D435 camera remotely through SSH, as it doesn’t require a GUI or monitor. The ASCII image is displayed directly in the terminal, making it convenient for evaluation purposes. - The

rs-posecommand displays the pose output from the T265 camera, very useful for testing the T265. It is important to note that the T265 camera does not have built-in firmware memory. Therefore, when the camera is operated for the first time, the Realsense library uploads the firmware. Thers-posecommand is particularly useful for testing the functionality of the firmware and ensuring that it is present. In case the firmware is not present,rs-posealso takes care of uploading it. However, it is crucial to include the-v /dev/bus/usb/:/dev/bus/usb/parameter in thedocker runcommand. This parameter ensures that the container sees the hardware changes after firmware upload (and camera reboot), allowing the device to be visible to the docker container without requiring a container reboot.

How to Use Realsense SDK

There is a wiki page about how to use the Realsense SKD library here. I will summarize what I have learned so far here. The general form of using the Realsense SDK is as follow. We start with including the required header file.

#include <librealsense2/rs.hpp> The following steps are required for each camera sensor

We need to instantiate a Realsense pipeline as follows.

rs2::pipeline pipe;

Then we need to setup a config object to define the required stream (sensor data) and their formats from the sensor.

rs2::config cfg; cfg.enable_stream(RS2_STREAM_ACCEL); cfg.enable_stream(RS2_STREAM_GYRO); cfg.enable_stream(RS2_STREAM_DEPTH);

For example, here we are asking for acceleration, gyro, and depth data. It would work if we were using the D435i sensor since it would have IMU data. But we should drop the IMU related stream for D435. Enable stream accepts more arguments for example to define the frequency of data, format of data and resolution of the data. To learn more about the arguments, you could have a look at this file on the GitHub to learn how to define the data frequency, resolution and more.

Then we can just simply start the pipeline by passing the config object.

pipe.start(cfg);

Finally we need a loop to fetch data and do whatever we need with it.

while (true)

{

rs2::frameset frameset = pipe.wait_for_frames(1000);

if (rs2::motion_frame accel_frame = frameset.first_or_default(RS2_STREAM_ACCEL))

{

rs2_vector accel_sample = accel_frame.get_motion_data();

std::cout << "Accel:" << accel_sample.x << ", " << accel_sample.y << ", " << accel_sample.z << std::endl;

}

if (rs2::motion_frame gyro_frame = frameset.first_or_default(RS2_STREAM_GYRO))

{

rs2_vector gyro_sample = gyro_frame.get_motion_data();

std::cout << "Gyro:" << gyro_sample.x << ", " << gyro_sample.y << ", " << gyro_sample.z << std::endl;

}

} As you can see the data is fetch as a rs2::frameset object. Here we just print out the IMU data to stdout. wait_for_frameis a blocking call and has a timeout argument and if the data is not ready by the timeout, it throws an exception.

The complete code would look like this as a google test code.

#include <librealsense2/rs.hpp>

#include <iostream>

int main()

{

rs2::pipeline pipe;

rs2::config cfg;

cfg.enable_stream(RS2_STREAM_ACCEL);

cfg.enable_stream(RS2_STREAM_GYRO);

pipe.start(cfg);

while (true) // Application still alive?

{

rs2::frameset frameset = pipe.wait_for_frames(1000);

if (rs2::motion_frame accel_frame = frameset.first_or_default(RS2_STREAM_ACCEL))

{

rs2_vector accel_sample = accel_frame.get_motion_data();

std::cout << "Accel:" << accel_sample.x << ", " << accel_sample.y << ", " << accel_sample.z << std::endl;

}

if (rs2::motion_frame gyro_frame = frameset.first_or_default(RS2_STREAM_GYRO))

{

rs2_vector gyro_sample = gyro_frame.get_motion_data();

std::cout << "Gyro:" << gyro_sample.x << ", " << gyro_sample.y << ", " << gyro_sample.z << std::endl;

}

}

return 0;

} Fetch vs. Callback

The Realsense SDK has two mechanisms to retrieve data from the cameras. In the previous section, we learned how to use the fetch mechanism where we wait for the data to become ready. The second way of accessing data is to pass a callback function to the library and the callback is being called whenever the data is ready. So first we need to define the callback. The callback function should accept the const rs2::frame &frame as argument. Here is an example callback.

auto callback = [&](const rs2::frame &frame)

{

if (frame.is<rs2::frameset>())

{

std::cout << "Depth data" << std::endl;

} else if (frame.is<rs2::motion_frame>())

{

auto motion = frame.as<rs2::motion_frame>();

const auto &stream_type = motion.get_profile().stream_type();

std::cout << "gyro: " << (stream_type == RS2_STREAM_GYRO)

<< ", accel: " << (stream_type == RS2_STREAM_ACCEL) << std::endl;

} else if(frame.is<rs2::pose_frame>())

{

std::cout << "pose frame" << std::endl;

}

}; as function like auto motion = frame.as<rs2::motion_frame>(); to read the data. The first case is for reading Depth data (rs2::frameset), the second is for reading the IMU data (rs::motion_frame) and the third case is to read the pose data (rs2::pose_frame). To learn more about these frame types, you might have a look at rs_frame.hpp file on GitHub.

We need to pass this callback to the start function of the pipeline object. Here the complete code would look like. #include <librealsense2/rs.hpp>

#include <iostream>

#include <chrono>

#include <thread>

int main(){

auto callback = [&](const rs2::frame &frame)

{

if (frame.is<rs2::frameset>())

{

std::cout << "Depth data" << std::endl;

} else if (frame.is<rs2::motion_frame>())

{

auto motion = frame.as<rs2::motion_frame>();

const auto &stream_type = motion.get_profile().stream_type();

std::cout << "gyro: " << (stream_type == RS2_STREAM_GYRO)

<< ", accel: " << (stream_type == RS2_STREAM_ACCEL) << std::endl;

} else if(frame.is<rs2::pose_frame>())

{

std::cout << "pose frame" << std::endl;

}

};

rs2::pipeline pipe;

rs2::config cfg;

cfg.enable_stream(RS2_STREAM_ACCEL);

cfg.enable_stream(RS2_STREAM_GYRO);

cfg.enable_stream(RS2_STREAM_DEPTH);

std::this_thread::sleep_for(std::chrono::milliseconds(500));

pipe.start(cfg, callback);

while (true){}

return 0;

} Now if you see the while loop is empty and all of the work is done in the callback.

Multiple Realsense Sensors

If we have multiple Realsense Cameras like our project, we need multiple objects of rs2::pipeline and rs2::config , as many as cameras. We use rs2::context ctx; object to query all of the Realsense cameras and then set up their pipeline and configs accordingly. Here is an example code of doing that for D435 and T265 sensors with callback mechanism.

{

auto callback = [&](const rs2::frame &frame)

{

// callback code as before

};

rs2::context ctx;

rs2::pipeline pipe_t265;

rs2::config rs_cfg_t265;

rs2::pipeline pipe_d435;

rs2::config rs_cfg_d435;

for (auto dev : ctx.query_devices())

{

std::cout << dev.get_info(RS2_CAMERA_INFO_NAME) << std::endl;

if (strcmp(dev.get_info(RS2_CAMERA_INFO_NAME), "Intel RealSense D435") == 0)

{

rs_cfg_d435.enable_stream(RS2_STREAM_DEPTH); // , 256, 144, RS2_FORMAT_Z16, 90

}

else if (strcmp(dev.get_info(RS2_CAMERA_INFO_NAME), "Intel RealSense T265") == 0)

{

rs_cfg_t265.enable_stream(RS2_STREAM_ACCEL);

rs_cfg_t265.enable_stream(RS2_STREAM_GYRO);

rs_cfg_t265.enable_stream(RS2_STREAM_POSE);

}

}

pipe_d435.start(rs_cfg_d435, callback);

pipe_t265.start(rs_cfg_t265, callback);

while (true)

{

}

} Realsense and ROS 2

This blog post is already very wrong and also I haven’t finished my Realsense node, but you can fine the code on the GitHub page here. I will update the post later.

Please leave me any questions, feedback or comments in the comment section below.